The Connectome Versus The Transcriptome

Where did all the trans-paradigmatic animal biology go?

Something has gone wrong with our animal biology. No, I don’t just mean that we have spiteful mutants living among us trying to assassinate Presidents; nor am I talking about how endocrine disruptors are making for more femcels. I’m actually referring to the “-ology” part of the question. That is to say, the way we study life, including the models we use to describe it and the technical practices around engaging with it, has stalled out.

I’m not knowledgeable enough to diagnose (ha!) all of our society’s epistemic issues, but I feel comfortable stating that to make advances from here, biology, in particular, must start to take a more aggressively trans-paradigmatic view. My small contribution to this venture will be to quasi-transcribe and quasi-commentate on one particular exchange between Joscha Bach and Michael Levin on Curt Jaimungal’s podcast “Theories Of Everything”.

I’ve been a huge fan of these gentlemen for a while, so when I saw this episode appear on my feed a year ago featuring all three of them, I practically creamed my pants. Of course, listening to their discussion exceeded even my high expectations and I’ve been referring back to their dialogue here and there ever since. Finally, I’ve been able to take some time to explain some of the cool tidbits from this dialogue that have gripped me.

What’s useful in science is not answering questions, but discovering new questions. Reducing uncertainty is much easier than finding new areas of uncertainty that provide insight. – 54:24

We will take some fun detours, though, because discussing biology should be a joyful journey that includes memes and art.

Mea Fraternitas Fabri

Every once in a while, I’m asked by some of the men in my small little town to help out with a volunteer carpentry project to fulfill someone’s needs in the community. These range anywhere from a day-long effort to build some desks for someone’s office; to a week-long effort to build a wheelchair ramp at a house for someone who’s recovering from a bad car accident; to a month-long effort to build and stain an entire new set of bookshelves for our pastor who lost everything in a flood.

I sign up for these efforts because I’m interested in becoming a better tradesman myself, but what fascinates me much more is the different social coordination techniques these (usually older) guys employ to complete such projects. For the most part, and unlike me, none of these guys are former technical project managers. Even if they have some management experience, they’ve been retired for years, so all their community organization skills in this context are deployed on the fly.

At the most general level, I’ve noticed that a combination of two separate social coordination approaches ends up emerging and that they are strategically combined to craft our wooden treasures successfully. Doesn’t matter which guy leads the effort initially, doesn’t matter which particular skills each other guy brings, doesn’t matter the terrain nor the weather – the coordination efforts always take on a similar shape.

The first mechanism involves the project’s head honcho sharing his overall agenda with everyone and ensuring we all understand his vision. From there, he deploys subgroups of workers to complete the subtasks of the project according to these predetermined specifications. This sharing step happens usually once and no more than three times; it is fairly unmeticulous, too. Hardly any blueprints are ever exchanged, though some notes are scribbled on scraps of paper here and there.

The second mechanism occurs while individual subgroups are working. As they encounter difficulties or setbacks – for instance, running out of materials or needing further measurements – they communicate their needs either within their own working subgroup or to different subgroups, but not to the head honcho. The subgroup then completes the given task upon receiving the required help. In exceptional circumstances (ahhh, we started building a deck on top of a ditch, oops!), the original plans must be reconfigured. This is rare.

None of the carpentry projects fit into some exact linear combination of these two paradigms. Construction and life are messy. Nevertheless, I’m pointing out a distinct amalgam that starts with a basic planned, centralized approach but proceeds and finishes through a spontaneous, non-centralized approach.

Where else can we witness this duplex mechanism arising and how can we test that it works this way?

The Implementability Mindset

If you’ve ever studied advanced mathematics or philosophy, you may know that there are two different schools of thought on which types of logic are valid. Classical logic operates according to standard deductive rules, which allow one to prove things via contradiction or absurdity¹. For example, an argument of this form may look like:

Assume that bananas don’t exist. This implies through steps [1], [2], and [3] that volcanoes spew hot lava when they erupt. This further implies through steps [4] and [5] that the color purple is fake. However, purple is clearly real! Thus, bananas must exist.

Carefully note that in this example, the author didn’t really prove the existence of bananas. All they showed is that if bananas don’t exist, then it leads to some weird incoherence about the color wheel. They haven’t shown me what a banana is or its essential properties.

On the other hand, constructivist logic states that in order to prove something true, or show its existence, you actually have to construct it. Taking this second approach forces you to adopt much more of an “algorithmic” or “engineering” mindset because it doesn’t let you lean on proof by contradiction or the law of the excluded middle. The only way to convince me that bananas exist is to grow them according to a process I can verify or reproduce myself.
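To make the contrast concrete, here is a minimal sketch in Lean 4. The choice of proof assistant and both toy statements are mine, not anything from the podcast. The first proof is constructive because it hands over a witness; the second uses `Classical.byContradiction`, which concludes a claim from the absurdity of its negation without building anything.

```lean
-- Constructive: to show that some number squares to 49, exhibit the number.
example : ∃ n : Nat, n * n = 49 := ⟨7, rfl⟩

-- Classical: if assuming "not P" leads to absurdity, conclude P.
-- No witness or construction is produced along the way.
example (P : Prop) (h : ¬P → False) : P := Classical.byContradiction h
```

The banana argument above has the shape of the second proof. A constructivist would only accept something shaped like the first.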

The overarching claim made by Joscha – who is an elite computer scientist and is therefore partial to this “implementability mindset” – is that mainstream biology has lagged in its discoveries because it has stopped pursuing implementation as its operating means for discovery and has instead sunk into promoting “evidence-based” generation of “theories” whose methodologies are based on classically-derived assumptions and social desirability factors such as peer review, and whose predictive results are usually influenced by underhanded statistical fakery.

“What do you care about what stupid people think?”, the absolute best part of this YouTube video at 52:48

The Story Outside Synapses

Nowhere is this preference for methodology over implementation more clear than in the field of neuroscience.

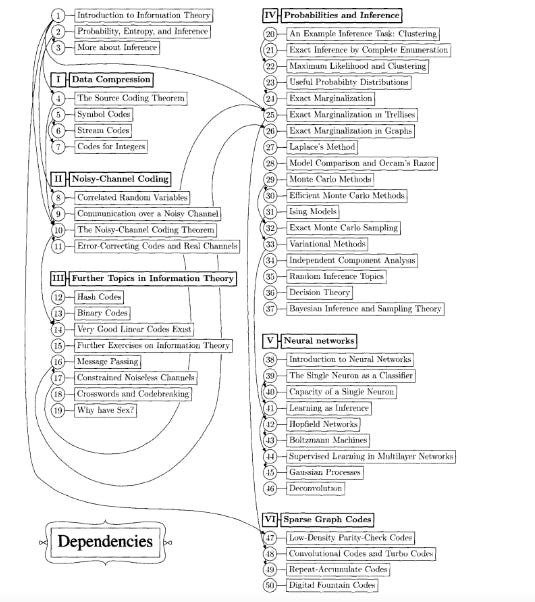

The current mainstream view in neuroscience is that all information about how neurons store and propagate appropriate responses to a variety of external stimuli is located exclusively in the pattern network of synapses and its unique physical configurations within a given organism’s brain. In other words, the claim is that neural memory lives entirely within the connectome. Most attempts to perform research that fall outside the narrow methodologies offered by this connectome-exclusive hypothesis are considered illegitimate.

However, Michael provides a powerful counter-example to this view in the example of trophic memory in deer antlers. Deer antlers have to be regenerated yearly, and as they are chock full of neurons, they make for an ideal neuroscientific study subject. Indeed, as discovered, one can cause small injuries to the deer antlers at certain places. After the new set comes in, these injuries are still reproduced in the same precise locations as the old ones.

If injury occurs at a particular point in the branched structure of the antler, it makes a small callus and heals; the rack will be shed as normal, and next year, a new rack will grow, with an ectopic tine (branch) at the location where the damage occurred in the previous year. …

The effect disappears after a few years and they go back to normal.

If the entire neuronal memory structure were located exclusively in the connectome, then there is no way that the new sets of antlers would be able to replicate the injury in the subsequent generations of antlers. This quite conclusively proves the connectome-exclusive view inadequate. However, as we’ve established, proving something false is not sufficient. We must also find out what is true. Thus, it is incumbent on us – as good scientists – to determine a higher-fidelity model of how this type of memory is stored and propagated.

RNA-Based Memory Transfer

If the memory store for nervous system responses is not located only in the connectome, then what are some alternative explanations? Turns out that there is a pretty good case to make that this information is stored in the transcriptome; specifically in messenger RNA.

As it happens, you can train both rats (as described in the above video) and snails (as described in the above paper) to respond to stimulus Y with behavior X, extract the RNA from these individuals, replicate it, and inject it into untrained individuals; the latter will then start responding to stimulus Y with the same behavior X without any training.

[M]emory storage involve[s] these epigenetic changes—changes in the activity of genes and not in the DNA sequences that make up those genes—that are mediated by RNA.

This view challenges the widely held notion that memories are stored by enhancing synaptic connections between neurons. Rather, [it sees] synaptic changes that occur during memory formation as flowing from the information that the RNA is carrying.

Now, in both of these cases, the stimuli and responses being transferred are evolutionarily conserved rather than evolutionarily random. That is to say, fear of the dark (rats) and withdrawing from painful shocks (snails) both have a long evolutionary history across many species.

Nervous systems are rather recent emergences in the grand history of life, so most of them share a lot in common. In particular, the ion chemistry behind action potentials is quite similar in all organisms that have them, even across differing phylogenies. Remember to get enough calcium and potassium, kids!

This is crucial because, as Michael points out, the more evolutionarily conserved a behavior is, the more likely it is to successfully transfer via RNA because the decoding machinery exists via common ancestors in all the downstream species. When you introduce RNA into an untrained organism, you don’t do it in a location-dependent manner. You kind of stuff it all in there, let the brain bathe in it, and update the transcriptome in every single cell.

Suppose instead that you trained a monkey to perform a dance every time it tasted blue cotton candy; even if you transferred the RNA from this training set to an untrained monkey, it would be far less likely to replicate the behavior because the lookup table or translation guide² for it is not part of the larger mammalian transcriptome.
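The lookup-table picture can be sketched in a few lines of Python. Everything here is a made-up toy of mine: the tag names, the `SHARED_DECODERS` table, and the `transfer` function are hypothetical and stand in for whatever the real decoding machinery is.

```python
# Hypothetical toy: "decoders" that a recipient inherited from common ancestors.
SHARED_DECODERS = {
    "dark-fear": "avoid the dark",
    "shock-withdrawal": "withdraw from the shock",
}

def transfer(rna_tags, recipient_decoders):
    """Return the behaviors a recipient can express from injected donor RNA.

    Tags without a matching decoder are silently ignored, which mirrors the
    idea that novel, non-conserved behaviors fail to transfer.
    """
    return [recipient_decoders[tag] for tag in rna_tags if tag in recipient_decoders]

# The donor learned one conserved response and one novel trick.
donor_rna = ["shock-withdrawal", "cotton-candy-dance"]
print(transfer(donor_rna, SHARED_DECODERS))
```

Only the conserved response comes out the other side; the cotton candy dance has no entry in the recipient's table, so it is lost in translation.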

Computers Are Our Friends

Alright, so, this is all a nice discussion, but to return to our implementability mindset, one of the best ways to test out a connectome-based versus a transcriptome-based approach to nervous system training is to write a program.

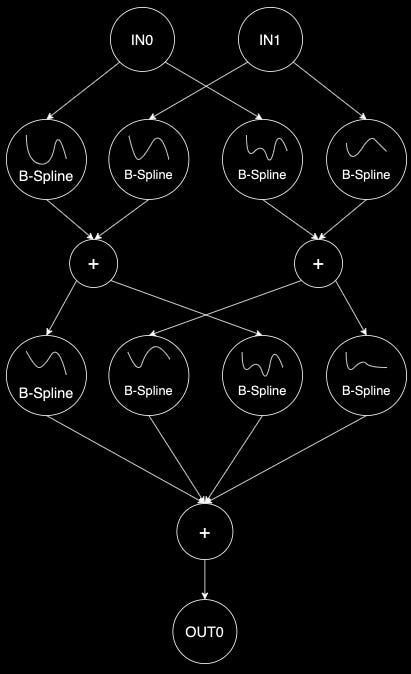

Perceptrons, or standard “neural networks”, are considered to be modeled after groups of human neurons, but they are modeled on the connectome-exclusive model of memory and training. Each node applies a fixed activation function to a weighted sum of its neighbors’ outputs, and training adjusts only those connection weights, which places a premium on the integrity of the “synaptic” structure and strength. This means the way perceptrons work is fundamentally different from the way the nervous system actually responds to stimuli, as Joscha points out and as I’ve now explained in more detail.

A computing interface based on the more inclusive transcriptome model would mimic the fact that trained responses to different activating factors in the connectome are learned and then stored as different RNA strands; and that groups of neurons selectively apply these global RNA-mediated functions at different edges of the nerve network as a neighborhood – exactly the way the deer antlers reproduce their injuries with spatial precision.

This modeling is precisely what some machine learning folks are starting to do! One alternative model to the standard Multilayer Perceptron (MLP) model of neural networks is called a Kolmogorov-Arnold Network (KAN). These KANs don’t train individual nodes with fixed activation functions; instead, they learn the activation functions themselves, which sit on the network’s edges. This more closely resembles the type of RNA-mediated learning that guides groups of neurons (“neighborhoods”) to respond collectively to activation fronts in the environment.

These learnable activation functions, when coded in software, are usually represented as splines – a fancy term for “piecewise polynomial”, i.e., curves built from polynomial pieces joined together at breakpoints.
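Here is a minimal sketch of the difference, in plain Python. It is a simplification under my own assumptions: the function names are hypothetical, and the edge functions are piecewise-linear curves rather than the smoother splines that actual KAN implementations use. The point is only where the learnable numbers live.

```python
import math

def mlp_node(inputs, weights, bias):
    """MLP-style node: learnable weights on edges, one fixed activation (tanh)."""
    return math.tanh(sum(w * x for w, x in zip(weights, inputs)) + bias)

def linear_spline(x, knots, values):
    """Evaluate a piecewise-linear curve through (knots[i], values[i]) at x.

    Inputs outside the knot range are clamped to the end values.
    """
    if x <= knots[0]:
        return values[0]
    if x >= knots[-1]:
        return values[-1]
    for i in range(len(knots) - 1):
        x0, x1 = knots[i], knots[i + 1]
        if x0 <= x <= x1:
            t = (x - x0) / (x1 - x0)
            return (1 - t) * values[i] + t * values[i + 1]

def kan_node(inputs, edge_values, knots):
    """KAN-style node: each incoming edge has its own learnable curve.

    The node itself just sums the edge outputs; there is no fixed activation.
    """
    return sum(linear_spline(x, knots, vals) for x, vals in zip(inputs, edge_values))

knots = [0.0, 1.0, 2.0]
# Two incoming edges, each with its own curve (these numbers are what training would adjust).
edge_values = [[0.0, 1.0, 4.0], [0.0, 2.0, 0.0]]
print(kan_node([0.5, 1.5], edge_values, knots))
```

In `mlp_node`, training could only change `weights` and `bias`; the shape of `tanh` never moves. In `kan_node`, training would reshape each edge's curve by adjusting its `values`.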

Now, at this point, you might be reading and thinking, “This wonderfully complex machine model is too perfect! It must have some gaps or flaws.” And you wouldn’t be wrong. The fact is, lay programmers have tried to put KANs into practice and have found them to be wanting. What’s notable, though, is that the main criticism given is that KANs require far more training data and are much slower to train than MLPs.

Well, this is largely true of people, too, isn’t it? Your average person takes multiple years to learn proper image recognition whereas a Keras or PyTorch module put together in one day outperforms every single six-month-old on the planet.

Perhaps there is room for the implementation of KANs to improve, or else, we are still missing key parts of how activation functions are generated, remembered, and applied. Either way, this investigation opens up more interesting questions to explore – which, again, is the whole point of science.

Morphological Competence

Up until now, we’ve profaned the connectome and exalted the transcriptome. It’s high time we do the reverse now, right? That would only be fair.

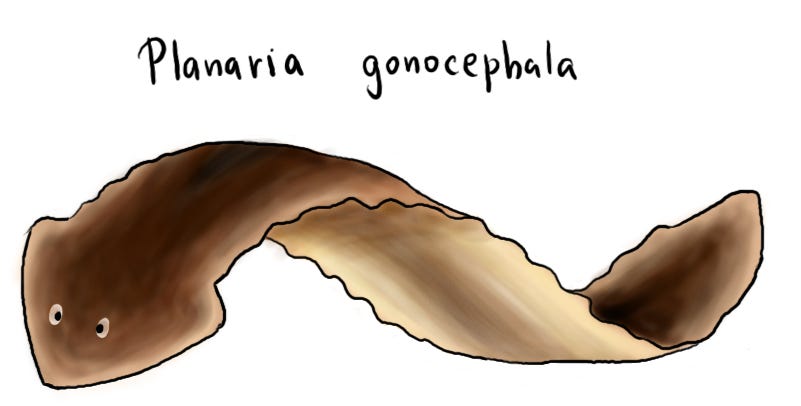

If the transcriptome has so much power over the development and activity of an animal’s nervous system, then one would expect that the individual genome would need to be perfectly regulated for the brain and spine to work well. This belief is reflected in how a concept called the Weismann barrier is commonly read: hereditary information flows only from the germline to the body, so the inherited genome sequence alone is taken to be sufficient to directly determine phenotype. This insistence on transcriptome-purity has taken hold in much of genomics research and presents a limited picture at best. To demonstrate its gaps, we can look to our favorite flatworm.

If you didn’t already know: the genome of Planaria worms is horrendous. As Michael explains, they are almost entirely mixoploid, so most of their cells have wildly different numbers of chromosomes, and each chromosome contains hundreds of accumulated mutations. That means these creatures are essentially slimy, crawling tumors.

And, yet, not only does the anatomical structure of these worms replicate perfectly every generation, the worms themselves are highly resistant to cancer. What gives?

Michael has just published a paper, with a rigorous underlying computational model, outlining a notion of morphological competence that illustrates how organisms retain signaling and information processes in the connectome that promote proper anatomical development even in the presence of loads of trash DNA.

This morphological competence operates on the principle of “stress-sharing”. If one part of the anatomy – or even a single cell within a sub-anatomical structure – is not able to find proper placement, it sends out a distress call that is absorbed by its neighbors. The neuronal neighbors then treat this distress call as if it were their own and re-organize to address the inadequacy until it is fixed before moving to the next developmental stage.
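A toy version of stress-sharing can be written in a few lines of Python. To be clear, this is my own illustrative sketch and not the computational model from Michael's paper: the `share_stress` and `plastic_cells` functions, the sharing rate, and the threshold are all hypothetical.

```python
def share_stress(stress, rate=0.5):
    """One round of sharing on a line of cells.

    Each cell keeps (1 - rate) of its stress and passes the rest,
    split equally, to its immediate neighbors.
    """
    n = len(stress)
    shared = [0.0] * n
    for i, s in enumerate(stress):
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < n]
        if not neighbors:
            shared[i] += s  # a lone cell has nobody to share with
            continue
        shared[i] += s * (1 - rate)
        for j in neighbors:
            shared[j] += s * rate / len(neighbors)
    return shared

def plastic_cells(stress, threshold=0.2):
    """Cells stressed enough to be willing to re-organize."""
    return [i for i, s in enumerate(stress) if s > threshold]

tissue = [0.0, 0.0, 1.0, 0.0, 0.0]   # only the middle cell is misplaced
after = share_stress(tissue)
print(plastic_cells(tissue), plastic_cells(after))
```

Before sharing, only the misplaced cell is motivated to move. After one round, its neighbors feel the distress as their own and become willing to re-organize too, which is the whole trick.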

Joscha completes this line of reasoning with a hardware analogy. If your laptop contained a hard drive that was constantly bit flipping (corrupt transcriptome), it would be impossible to write or run applications on it. However, if on top of the disk, there was a virtual layer that was constantly running low-level error correction (stress-sharing in connectome), then the computer would function normally even with its corrupted bit stores.
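Joscha's analogy can be sketched with about the simplest error-correcting scheme there is: store every bit three times and take a majority vote on read. This repetition code is my own stand-in for illustration (the function names are hypothetical too); the podcast does not specify a scheme, and real storage systems use far more efficient codes.

```python
def encode(bits, copies=3):
    """Write each bit to the 'disk' several times in a row."""
    return [b for b in bits for _ in range(copies)]

def flip(stored, positions):
    """Simulate corruption by flipping the bits at the given positions."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(stored)]

def decode(stored, copies=3):
    """Read each group of copies back and take a majority vote."""
    out = []
    for i in range(0, len(stored), copies):
        group = stored[i:i + copies]
        out.append(1 if sum(group) * 2 > len(group) else 0)
    return out

data = [1, 0, 1, 1]
disk = flip(encode(data), {0, 4, 8})   # one corrupted copy in each of three groups
print(decode(disk) == data)
```

The raw disk contents are wrong in three places, yet everything read through the correcting layer comes back intact. The scheme only breaks when a majority of copies in one group get corrupted at once.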

Thus, evolutionary selection pressures are not characterized by “survival of the fittest” but by “survival of the fittest-appearing”. Indeed, it seems that Planaria is an example of this principle taken to extremity – it has developed such a mighty morphological competence that its massive mutational load is irrelevant. Granted, flatworms are a world apart from primates, but the same principle has been demonstrated in the embryonic development of frogs (a much closer relative of ours), too.

On a more serious note, this harkens back to the need for treating ideas in a trans-paradigmatic manner. While neuroscientists worship axons and glia, and geneticists worship the nucleus, proper scientific inquiry tries to look at the same reality through both lenses. Moreover, robust biological models incorporate both paradigms and are verified by their implementability.

This is the reason why my carpenter mentors are so impressive. The genius of producing functional (and beautiful!) furniture lies neither in the integrity of blueprints nor in the strength of communication between laborers. Each system works to make up for disparities in the other.

Speed Dating and Superpowers

Recently, I attended a speed dating event with about 100 guys, and one of the suggested icebreakers was to ask the person in front of you, “If you could have any superpower, what would it be?” Most of them gave pretty standard answers like “the ability to fly”, “invisibility”, or “mind reading”. Some gave slightly interesting answers such as “heat vision” (that guy was a welder) and “flexible bones” (that guy was a dancer and circus artist).

I did not have a great answer, but upon closer reflection, I think I would want to have the power of the transcriptome applied to everyday situations. With it, I’d be able to quickly internalize the hard-earned lessons of everyone I ever meet just through transference. The RNA of everyday life consists of the random expertise and talents collected by those around you through their vast and varied experience. Think about all the wisdom you could absorb with this supernatural transcriptomic ability!

“Why not the power of the connectome?”, the astute reader might offer as a counter – a fair question. After all, the connectome of everyday situations takes these learned proclivities and activates them judiciously in the face of obstacles, which is an invaluable quality to possess.

To quip back, I think I would want my would-be husband to have that power instead of me. You see, this would make us quite complementary. As we go on our many wild adventures throughout life, I could be the one to privately collect a library of wisdom tools, and he could be the one to publicly deploy them as the challenging conditions that we face require of us.

Perhaps, on our future wedding registry, instead of asking for the classic ‘His & His’ towels, we’ll ask for the phrases “Chief Transcriptome Officer” and “Chief Connectome Officer” to be embroidered in silk. That would definitely make them a more meaningful family heirloom.

¹ As a side note, most analytical philosophy is heavily influenced by classical logic, which is why it is mostly a trash field with trash arguments.

² This is one of the likely explanations for why the mRNA COVID-19 vaccines were of questionable efficacy in humans.

Lately I have been looking into the connections between various theories of personality and various theories of how the brain works to try and come up with a new over-simplified way of understanding the connection. One potentially useful binary is the connection between local and global processing in the brain.

For example, if you squint just right you see that men tend to have more local processing power and women have more global connectivity in their brains. If you squint even harder you might find that autism kind of looks like an over-emphasis on local processing and schizophrenia almost looks like too much global connectivity.

But this is all about the connectome... would you characterize the transcriptome as relating more to local or global computing?

Reminds me of the stories about personality shifts post organ transplantation. A cursory look at some papers and it doesn’t look like the research is robust yet, but perhaps personality is conserved and transferred as a result of transcriptome memory. Even beyond that, I know there are ongoing efforts by some researchers to bridge GRN research with psychotherapeutic modalities. Thanks for the article.